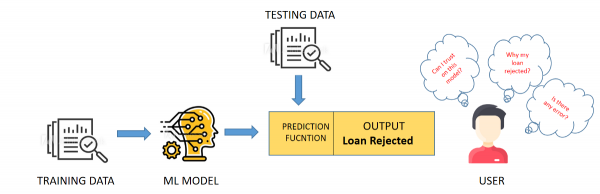

Artificial intelligence (AI) is rapidly evolving, and its use is becoming more prevalent in our daily lives. From chatbots to autonomous cars, AI systems are making decisions that affect us all. However, the way these systems arrive at their decisions can often be a mystery, leading to concerns about transparency, accountability, and fairness. Explainable AI, or XAI, is an emerging field that aims to address this issue by making AI decisions more transparent and understandable.

By understanding how an AI system arrives at its conclusions, we can better assess its accuracy, reliability, and potential biases. This matters most in fields such as healthcare and criminal justice, where AI systems are increasingly used to make critical decisions that directly affect people's lives.

Challenges

One of the main challenges with XAI is finding ways to make complex AI models more transparent and interpretable. This requires new techniques and tools for visualizing and explaining machine learning models. For example, researchers are developing methods for generating heat maps that highlight the areas of an image that an AI system uses to make its predictions. Other approaches involve creating decision trees or rule sets that show the sequence of decisions that an AI system makes.
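One simple way to produce such a heat map is occlusion: blank out one pixel at a time and record how much the model's score drops. The sketch below is a minimal, dependency-free illustration of that idea. The `toy_model` function, the 3x3 image, and the zero baseline are all hypothetical stand-ins, not part of any particular library.

```python
def toy_model(image):
    """Hypothetical classifier score: responds only to the 2x2 top-left patch."""
    return sum(image[r][c] for r in range(2) for c in range(2))


def occlusion_heatmap(model, image, baseline=0.0):
    """Return, per pixel, the score drop when that pixel is replaced by the baseline."""
    base_score = model(image)
    heatmap = []
    for r, row in enumerate(image):
        heat_row = []
        for c in range(len(row)):
            occluded = [list(x) for x in image]  # copy so the input is not modified
            occluded[r][c] = baseline
            heat_row.append(base_score - model(occluded))
        heatmap.append(heat_row)
    return heatmap


image = [
    [1.0, 2.0, 3.0],
    [4.0, 5.0, 6.0],
    [7.0, 8.0, 9.0],
]
heatmap = occlusion_heatmap(toy_model, image)
for row in heatmap:
    print(row)
```

Pixels the model ignores get a value of zero, so the non-zero cells mark the region the prediction depends on. Real image models usually occlude patches rather than single pixels, or use gradient-based saliency instead.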

Advantages

There are several advantages to using XAI in AI systems. For one, it can help to build trust in AI technologies by providing more transparency and accountability. This, in turn, can lead to increased adoption of AI systems in critical areas such as healthcare and finance. Additionally, XAI can help to identify and mitigate biases in AI systems, which can be a significant concern in fields such as criminal justice.

However, XAI is not without its challenges. One is striking a balance between interpretability and accuracy: making an AI system more interpretable can come at the cost of accuracy, because a complex model may need to be simplified or replaced by a simpler one. Additionally, generating explanations can be resource-intensive, requiring significant computational power and specialized expertise.
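The trade-off can be made concrete by fitting a very simple, readable rule to a more complex model and measuring how often the two agree (often called fidelity). The following is a minimal sketch under stated assumptions: `black_box` is a hypothetical model invented for illustration, and the surrogate is restricted to a single threshold rule.

```python
def black_box(age):
    """Hypothetical complex model: positive from 50 upward, except for a gap at 70-79."""
    return 1 if age >= 50 and not (70 <= age < 80) else 0


def fit_threshold_rule(model, inputs):
    """Find the rule 'predict 1 if x >= t' that agrees most often with the model."""
    best_threshold, best_fidelity = None, -1.0
    for t in inputs:
        agreements = sum((1 if x >= t else 0) == model(x) for x in inputs)
        fidelity = agreements / len(inputs)
        if fidelity > best_fidelity:
            best_threshold, best_fidelity = t, fidelity
    return best_threshold, best_fidelity


inputs = list(range(100))
threshold, fidelity = fit_threshold_rule(black_box, inputs)
print(f"rule: predict 1 if age >= {threshold}; fidelity = {fidelity:.2f}")
```

The one-line rule is easy to read but cannot represent the gap in the original model, so it disagrees with the black box on part of the input range. That lost agreement is the price paid for simplicity.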

XAI Tools and Frameworks

There are several XAI tools available that help to achieve these goals.

1-LIME

LIME (Local Interpretable Model-agnostic Explanations) is a popular XAI tool that can explain the predictions of any machine learning model. It works by generating perturbed samples around the input, querying the original model on them, and training a simple interpretable model (typically a weighted linear model) on those samples to approximate the original model's behavior near that input. The surrogate's weights identify the features that contributed most to an individual prediction, which gives an explanation that is easy to read.
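The sample-then-fit idea described above can be sketched in plain Python. This is not the library's API or its exact algorithm, only an illustration of the underlying technique: `black_box`, the Gaussian perturbation scale, the kernel width, and the helper names are all assumptions made for the example.

```python
import math
import random


def black_box(x):
    """Hypothetical opaque model with a nonlinear dependence on x[0]."""
    return x[0] ** 2 + 3.0 * x[1]


def solve_linear_system(a, b):
    """Solve a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in reversed(range(n)):
        tail = sum(m[r][c] * w[c] for c in range(r + 1, n))
        w[r] = (m[r][n] - tail) / m[r][r]
    return w


def explain_locally(model, instance, n_samples=500, scale=0.1,
                    kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear surrogate around `instance`.

    Returns one weight per feature (the local slope of the model).
    """
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        offsets = [rng.gauss(0.0, scale) for _ in instance]
        sample = [v + d for v, d in zip(instance, offsets)]
        distance_sq = sum(d * d for d in offsets)
        rows.append([1.0] + offsets)  # leading 1.0 is the intercept column
        targets.append(model(sample))
        weights.append(math.exp(-distance_sq / kernel_width ** 2))
    k = len(instance) + 1
    # Weighted normal equations: (X^T W X) w = X^T W y
    xtwx = [[sum(wt * r[i] * r[j] for wt, r in zip(weights, rows))
             for j in range(k)] for i in range(k)]
    xtwy = [sum(wt * r[i] * y for wt, r, y in zip(weights, rows, targets))
            for i in range(k)]
    return solve_linear_system(xtwx, xtwy)[1:]  # drop the intercept


local_weights = explain_locally(black_box, [1.0, 2.0])
print([round(w, 2) for w in local_weights])
```

Near the point (1, 2) the hypothetical model behaves like a linear function with slopes of roughly 2 and 3, and the surrogate's weights should land close to those values. The explanation is only valid locally: at a different input the slope for the first feature would change.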

2-SHAP

SHAP (SHapley Additive exPlanations) is an XAI tool that provides an explanation for the output of any machine learning model. It uses Shapley values, a concept from cooperative game theory for fairly assigning credit to each feature for the final prediction. SHAP can provide a local explanation of a particular instance, explaining why a certain prediction was made, as well as a global view of the model obtained by aggregating local explanations across many instances, showing which features matter most and how they interact.
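To make the credit-assignment idea concrete, the sketch below computes exact Shapley values for a tiny model by averaging each feature's marginal contribution over every ordering of the features. It illustrates the definition, not the SHAP library's API or its faster approximations. The `credit_model` function, the example instance, and the all-zero baseline are hypothetical.

```python
from itertools import permutations


def credit_model(features):
    """Hypothetical scoring model with an income-debt interaction."""
    income, debt, age = features  # age is deliberately ignored by the model
    return 2.0 * income - debt + 0.5 * income * debt


def shapley_values(model, instance, baseline):
    """Exact Shapley values: average marginal contribution over all orderings.

    A feature is 'absent' when it takes its baseline value.
    """
    n = len(instance)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for ordering in orderings:
        current = list(baseline)
        previous_output = model(current)
        for i in ordering:
            current[i] = instance[i]  # switch feature i on
            output = model(current)
            totals[i] += output - previous_output
            previous_output = output
    return [t / len(orderings) for t in totals]


instance = [3.0, 2.0, 40.0]
baseline = [0.0, 0.0, 0.0]
values = shapley_values(credit_model, instance, baseline)
print(values)  # [7.5, -0.5, 0.0]
```

The interaction term is split evenly between income and debt, the unused feature receives zero credit, and the values sum to the difference between the prediction and the baseline output. Enumerating all orderings grows factorially with the number of features, which is why practical tools rely on approximations.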

3-Captum

Captum is another popular XAI tool, built for PyTorch, that is designed to help debug and interpret deep neural networks. It provides a variety of algorithms for attributing the output of the network to the input features, including Layer-wise Relevance Propagation, DeepLIFT, and Integrated Gradients. Captum also provides visualization tools to help users better understand the attribution results.
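Of the attribution methods listed, integrated gradients is the easiest to sketch without a deep learning framework: average the model's gradient along a straight path from a baseline to the input, then scale by the input difference. The code below is a minimal illustration under stated assumptions, not Captum's API. The `tiny_network` function is a hypothetical stand-in for a neural network, and gradients are estimated numerically rather than by backpropagation.

```python
import math


def tiny_network(x):
    """Hypothetical differentiable model: one tanh unit plus a linear skip term."""
    return math.tanh(0.8 * x[0] - 0.4 * x[1]) + 0.1 * x[1]


def numerical_gradient(f, x, eps=1e-5):
    """Central-difference estimate of the gradient of f at x."""
    grads = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += eps
        down[i] -= eps
        grads.append((f(up) - f(down)) / (2 * eps))
    return grads


def integrated_gradients(f, x, baseline, steps=100):
    """Approximate the path integral of gradients with a midpoint Riemann sum."""
    totals = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint of the k-th segment
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i, g in enumerate(numerical_gradient(f, point)):
            totals[i] += g
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, totals)]


x = [2.0, 1.0]
baseline = [0.0, 0.0]
attributions = integrated_gradients(tiny_network, x, baseline)
print([round(a, 3) for a in attributions])
print(round(tiny_network(x) - tiny_network(baseline), 3))
```

A useful sanity check is that the attributions add up, approximately, to the difference between the model's output at the input and at the baseline. The choice of baseline is an assumption that shapes the result, so it should be reported alongside the attributions.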

4-Skater

Skater is a Python library that provides tools for explaining the predictions of any machine learning model, including decision trees, random forests, and deep neural networks. It can provide both local and global explanations, and allows users to visualize the feature importance for each prediction.
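One common model-agnostic way to estimate global feature importance is to shuffle one feature column at a time and measure how much the model's accuracy drops. The sketch below illustrates that general technique. It is not Skater's API and may differ from how Skater computes importance internally. The `model` function and the small dataset are hypothetical.

```python
import random


def model(row):
    """Hypothetical classifier that only looks at the first feature."""
    return 1 if row[0] >= 10 else 0


def accuracy(predict, rows, labels):
    return sum(predict(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(predict, rows, labels, n_repeats=5, seed=0):
    """Mean accuracy drop after shuffling each feature column in turn."""
    rng = random.Random(seed)
    base = accuracy(predict, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild the rows with only column j replaced by shuffled values.
            shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(base - accuracy(predict, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


rows = [[i, (7 * i) % 20] for i in range(20)]
labels = [model(r) for r in rows]  # labels the model predicts perfectly
importances = permutation_importance(model, rows, labels)
print(importances)
```

Shuffling the first feature breaks the link between input and label, so accuracy falls. Shuffling the second feature changes nothing because the model never reads it, and its importance is exactly zero.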

5-Alibi

Alibi is an open-source XAI tool that provides several algorithms for explaining the output of machine learning models. It includes techniques such as anchors, counterfactuals, and prototypes, and allows users to generate explanations for individual predictions as well as for the overall behavior of the model.
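A counterfactual explanation answers the question "what is the smallest change to this input that would flip the prediction?". The sketch below shows the idea with a brute-force search along a single feature. It is a stand-in for illustration only: it does not use Alibi's API, and libraries typically rely on optimization over all features rather than a one-dimensional scan. The `approve_loan` classifier and the applicant data are hypothetical.

```python
def approve_loan(applicant):
    """Hypothetical classifier: approve when the score reaches 50."""
    return applicant["income"] - 2 * applicant["debt"] >= 50


def find_counterfactual(model, instance, feature, step, max_steps=1000):
    """Move one feature in increments of `step` until the prediction flips.

    Returns the first modified copy of `instance` with a different
    prediction, or None if no flip occurs within `max_steps`.
    """
    original = model(instance)
    candidate = dict(instance)
    for _ in range(max_steps):
        candidate[feature] += step
        if model(candidate) != original:
            return candidate
    return None


applicant = {"income": 60, "debt": 10}  # score 40, so the loan is rejected
by_income = find_counterfactual(approve_loan, applicant, "income", step=1)
by_debt = find_counterfactual(approve_loan, applicant, "debt", step=-1)
print(by_income)  # {'income': 70, 'debt': 10}
print(by_debt)    # {'income': 60, 'debt': 5}
```

Each result reads as an actionable statement, such as "the loan would have been approved with an income of 70" or "with a debt of 5". Which counterfactual is more useful depends on which change is realistic for the person affected.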

Conclusion

In conclusion, Explainable AI is an important field that is rapidly gaining attention as AI systems become more prevalent in our lives. By making AI decisions more transparent and interpretable, XAI can help to build trust in AI technologies and ensure that they are used responsibly and ethically. While there are challenges to be addressed, XAI represents an important step towards creating AI systems that are more accountable and fair.